Strings and codepages or "The Unicode Advantage"

This article is a copy of the article on the X# website, written by Robert v.d.Hulst.

Introduction

We have been quite busy creating the X# runtime, and while doing that we stumbled on the problem of bytes, characters, codepages, Ansi and Unicode. X# has to deal with all of that, and that can become quite complicated. That is why we decided to write a blog post about this. In this post we look back to explain the problems from the past and how the solutions to these problems are still relevant for us in a Unicode XBase environment such as X#.

Bytes and Characters in DOS

When IBM introduced DOS in the 1980s the computing world was much less complex than it is right now. Computers were rarely connected to a network and certainly not to computers outside the same office building or even outside the same city or country. Nowadays all our computers are connected through the internet and that brings new challenges for handling multiple languages with different character sets.

The origin of the XBase language was in CP/M (even before DOS) and the XBase runtimes and file formats have evolved over time, taking these changes into account.

Before IBM sold computers they were big in the typewriter industry. So they were well aware that there are different languages in the world with different character sets. Their IBM typewriters came in different versions with a different keyboard layout and different “ball” with characters. There were different balls with different character sets and different balls with fonts (Courier and Prestige were the most used, with 10 and 12 characters per inch).

DOS used a single byte (8 bits) to represent characters. So there was a limited number of characters available. The number was certainly not big enough to represent all characters used by languages for which IBM was developing computers.

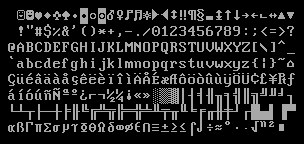

That is why IBM decided to group characters in so-called code pages. The most common codepage used in the US and many other countries in the world was codepage 437.

This codepage contains all non-accented Latin characters as well as several characters with accents, several (but not all) Greek characters, the inverted exclamation mark and question mark used in Spanish and quite a few line draw characters, used to draw different boxes on the 25 x 80 DOS displays.

Unfortunately this was not enough.

For many Western European languages accented and special characters were missing, so for these countries codepage 850 was used.

If you compare this codepage with 437 you will see that the first half (0-127) is identical. The top half contains some differences.

As a developer you had to be aware of the codepage your client was using, because sometimes the carefully crafted boxes looked terrible if a customer was using codepage 850. A line draw character would be replaced with an accented character :(.

Outside Western Europe this still was not enough. My Greek colleagues could not work with codepage 850 because many Greek characters are missing.

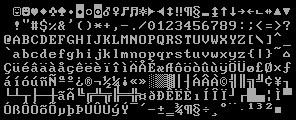

Several Greek codepages were invented, such as codepage 851. The last one that I know about is codepage 737:

And on other platforms (such as mainframes, Apple computers, Unix etc.) there are also codepages. Wikipedia has a full page with just the list of codepages: en.wikipedia.org/wiki/Code_page. And sometimes the same codepage has different names, depending on who you talked with <g>.

The reason that I am bringing this up now, 40 years later, is that this was the situation in which XBase was born and in which the first Clipper programs were running. DBFs created with Clipper would store 8 bit characters in files, and the active codepage on the machine that the application was running on would give these characters a meaning. So the same byte 130 in a file would be represented as an accented e (é) on a machine with codepage 437 or 850, but as a capital Gamma (Γ) on a Greek computer running codepage 737.
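The effect is easy to reproduce in any language that ships codepage tables. The following is a small sketch in Python (used here only for illustration, it is not X# code) that decodes the same byte under the three codepages mentioned above. The variable names are made up for this example.

```python
# Decode the single byte 130 (0x82) under three DOS codepages.
raw = bytes([130])

decoded = {cp: raw.decode(cp) for cp in ("cp437", "cp850", "cp737")}

for codepage, char in decoded.items():
    print(codepage, repr(char))
```

The byte never changes; only the table used to interpret it does.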

So there is a difference between bytes and characters, even in the DOS world.

The codepage gives the byte a “meaning”. The byte itself has no meaning. There is a 'contract' that says that the number 65 in a field with a string value is the character 'A'. But in reality it is just a number. And why are the lower case characters exactly 32 positions later in the table than the uppercase characters? That was simply convenient: with a simple AND or OR operation characters could be converted to upper or lower case.
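As a minimal sketch of that trick (Python, for illustration only; the function names are hypothetical): 32 is a single bit, so clearing it turns 'a' into 'A' and setting it turns 'A' into 'a'. This only works for the unaccented letters A-Z, which is why the range checks are there.

```python
CASE_BIT = 0x20  # 32: the distance between 'A' (65) and 'a' (97)

def ascii_upper(code):
    """Clear the case bit for a-z; leave every other byte alone."""
    return code & ~CASE_BIT if ord("a") <= code <= ord("z") else code

def ascii_lower(code):
    """Set the case bit for A-Z; leave every other byte alone."""
    return code | CASE_BIT if ord("A") <= code <= ord("Z") else code

print(chr(ascii_upper(ord("q"))), chr(ascii_lower(ord("Q"))))
```

Accented characters in the upper half of a codepage do not follow this pattern, which is one reason why separate conversion tables were needed.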

Sorting

Some rules also had to be established on how to sort these characters and how to convert characters from uppercase to lowercase. DOS did not have built-in support for sorting characters, so each developer had to create his own routine. Clipper used so-called nation modules (ntxfin.obj for Finnish, ntxgr851.obj for Greek with codepage 851 etc.). Each of these modules contained 3 tables: a table to convert lowercase to uppercase, a table to convert uppercase to lowercase and finally a table that defines the relative sort weight for each character. This last table could position the accented 'é' (130) between 'e' and 'f', for example, by giving it a weight greater than 'e' and smaller than 'f'. But of course for Greek in codepage 737 that would be completely useless, since 130 then represents the capital Gamma (Γ). Even between 2 Greek codepages there could be complications. The original Greek codepage 851 had the capital Gamma at 166 where codepage 737 put it at location 130.
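The idea of a weight table can be sketched as follows. This is Python for illustration only, and the table is a hypothetical one built for this example, not the contents of a real Clipper nation module.

```python
# Hypothetical weight table for codepage 437 (not a real Clipper nation table).
# Start from plain byte order, doubled so there is a free slot after every byte.
weights = [2 * b for b in range(256)]
# Give é (byte 130) a weight greater than 'e' and smaller than 'f'.
weights[130] = weights[ord("e")] + 1

def sort_key(raw):
    """Translate each byte into its sort weight."""
    return [weights[b] for b in raw]

words = [w.encode("cp437") for w in ("f", "é", "e")]
by_byte_value = [w.decode("cp437") for w in sorted(words)]
by_weight = [w.decode("cp437") for w in sorted(words, key=sort_key)]

print(by_byte_value)  # ['e', 'f', 'é']
print(by_weight)      # ['e', 'é', 'f']
```

The table works on byte values, not on characters. Apply the same table to data written under codepage 737 and byte 130, now a capital Gamma, would be sorted between 'e' and 'f'.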

Anyway this all worked, as long as you used one machine or one network with machines with the same setup.

Moving from DOS to Windows

And then Windows came and we started to use Visual Objects.

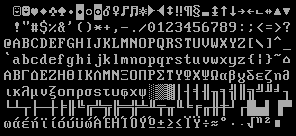

Microsoft decided that Windows would again use different codepages. So they introduced the distinction between OEM codepages and ANSI codepages. The original 437, 850 etc. were labeled as OEM codepages. Microsoft introduced new Windows codepages such as 1252 (ANSI Latin) and 1253 (ANSI Greek).

The biggest change compared to the original codepages in DOS was that the line draw characters were no longer there, which makes sense because they were no longer needed in a graphical user interface, and these line draw characters were replaced by accented characters. As a result several national codepages could be merged into regional codepages. The lower half of all codepages was still the same as in DOS, and most differences were in the upper half.

Again there are some real changes. In the Greek ANSI codepage 1253 the Gamma has been moved to 0xC3 = 195.

This was a challenge of course for the original developers of Visual Objects. They had to deal with ‘old’ data files and text files created in DOS and ‘new’ data files created in Windows.

Ansi and OEM in Visual Objects

One of the things introduced in the language to solve this was the ANSI setting of the Visual Objects language. With SetAnsi(TRUE) you were telling the runtime that you wanted to run in ‘Ansi’ mode and that any files you create should be written in Ansi format. With SetAnsi(FALSE) you told the VO runtime that you want to work in OEM mode.

The major area affected by this was the DBF access. There are a couple of possible scenarios:

- Reading a DBF file created in a Clipper application.

This file has a marker in the header with the value 0x03 or 0x83, indicating a DBF without memo or with memo. The 0x03 also indicates that the file is in OEM format. When the SetAnsi setting in VO is true then VO will call the Windows function OemToChar when reading data and will call CharToOem when writing data. As a result characters are mapped from their location in the DOS codepage to their location in the Windows codepage. With SetAnsi(FALSE) no translation is done, so the strings in memory will have the same binary values as the strings in the file.

- DBF files created in VO with SetAnsi(FALSE) will have the same 0x03 and 0x83 header bytes and will be compatible with DBF files created in Clipper.

- DBF files created in VO with SetAnsi(TRUE) will have a header byte 0x07 or 0x87, so one bit with the value 0x04 is set. This bit indicates that the file is Ansi encoded and no OEM translation will be done.

Important to realize is that the SetAnsi() flag is global and that mixing files with different settings will most likely cause problems.
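The scenarios above boil down to one bit test and one table lookup. The following Python sketch is an illustration only: the function names and the default codepages are assumptions for this example, and the real VO runtime calls the Windows API instead.

```python
ANSI_FLAG = 0x04  # the bit described above: set in 0x07 / 0x87 headers

def is_ansi_dbf(header_byte):
    """True when the first header byte marks the file as Ansi encoded."""
    return bool(header_byte & ANSI_FLAG)

def oem_to_ansi(raw, oem="cp850", ansi="cp1252"):
    """Rough equivalent of an OEM to Ansi translation:
    the same characters, but at different byte values."""
    return raw.decode(oem).encode(ansi, errors="replace")

for marker in (0x03, 0x83, 0x07, 0x87):
    print(hex(marker), "Ansi" if is_ansi_dbf(marker) else "OEM")

# é is byte 0x82 in codepage 850 and byte 0xE9 in codepage 1252
print(oem_to_ansi(b"\x82"))
```

Unlike this sketch, the Windows functions do not take the codepages as parameters, which is the limitation discussed in the section on sorting.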

Sorting in Visual Objects

For string comparisons VO introduced the so-called Collation. With SetCollation(#Clipper) you can tell the runtime that you want Clipper compatible comparisons. VO uses Nation DLLs for this. These Nation DLLs contain the same tables that the original Clipper nation modules had. So if your app contained the code SetNatDLL("Pol852.dll") and SetCollation(#Clipper) then the strings would be sorted according to the Polish collation rules for codepage 852.

One potential problem when reading OEM files is that the Oem2Ansi and Ansi2OEM functions do not allow you to specify which OEM codepage you want to convert from or to. The ANSI codepage is the codepage that matches the current Windows version. The OEM codepage is a global setting in Windows that is difficult to see and control. If you want to see what the OEM setting on your machine is, then the easiest way to do so is to open a command prompt and type CHCP. This should show the active OEM codepage.

When you work with Ansi files you are most likely better off using the default SetCollation(#Windows). This will sort indexes using the built-in sorting routines from Windows.

Unfortunately that is not enough in some scenarios, especially in countries where more than one language is spoken and where different users can have different language versions of Windows. The reason for that is that these different language versions of Windows can have different sorting algorithms.

When SetCollation(#Windows) is used then Visual Objects uses a built-in Windows function to sort. This function takes a localeID as parameter which indicates how to sort. Visual Objects 2.8 introduced a runtime function that allows you to specify this localeID in code, so you can make sure that every program running your code uses the same locale. For example

to use the standard German Language in Phone Book sorting mode:

SetAppLocaleId(MAKELCID(MAKELANGID(LANG_GERMAN, SUBLANG_GERMAN),SORT_GERMAN_PHONE_BOOK))

to use the Swiss German Language in Default sorting mode:

SetAppLocaleId(MAKELCID(MAKELANGID(LANG_GERMAN, SUBLANG_GERMAN_SWISS),SORT_DEFAULT))

to use the Norwegian Bokmal language, default sorting mode:

SetAppLocaleId(MAKELCID(MAKELANGID(LANG_NORWEGIAN,SUBLANG_NORWEGIAN_BOKMAL),SORT_DEFAULT))

to use language independent sorting:

SetAppLocaleId(MAKELCID(MAKELANGID(LANG_NEUTRAL,SUBLANG_NEUTRAL),SORT_DEFAULT))
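The MAKELANGID and MAKELCID macros simply pack three small numbers into one 32 bit value. The sketch below (Python, for illustration only) reproduces that arithmetic with the constant values from the Windows SDK headers; the function names are hypothetical stand-ins for the macros.

```python
# Constant values as defined in the Windows SDK headers
LANG_GERMAN = 0x07
SUBLANG_GERMAN = 0x01
SUBLANG_GERMAN_SWISS = 0x02
SORT_DEFAULT = 0x0
SORT_GERMAN_PHONE_BOOK = 0x1

def make_lang_id(primary, sub):
    """MAKELANGID: sublanguage in the upper 6 bits, primary language in the lower 10."""
    return (sub << 10) | primary

def make_lcid(lang_id, sort_id):
    """MAKELCID: sort id above the 16 bit language id."""
    return (sort_id << 16) | lang_id

german_phone_book = make_lcid(make_lang_id(LANG_GERMAN, SUBLANG_GERMAN), SORT_GERMAN_PHONE_BOOK)
swiss_german = make_lcid(make_lang_id(LANG_GERMAN, SUBLANG_GERMAN_SWISS), SORT_DEFAULT)

print(hex(german_phone_book))  # 0x10407
print(hex(swiss_german))       # 0x807
```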

With all these tools in place your VO app could both read and write Clipper files and also read and write files with Windows/Ansi encoding.

Single byte Ansi and Multi Byte Ansi

Since the character sets were based on 8 bit characters there was a problem with languages with more characters (or symbols), such as Chinese, Korean and Japanese. For these languages the so-called Double Byte Character Sets (DBCS) were introduced. These are character sets where some characters are represented by 2 bytes. The mechanism behind all of this is actually quite complex, but the simple version is that some characters in the upper half of the character set are like 'doors' into a second page of 256 characters. This actually works great and Windows supports all of that. The lower half of the character set (everything up to 127) consists of “normal characters”. The upper half contains characters that act like a doorway to a table of the 'real characters'. These are called the lead bytes. The ICU converter explorer shows you how this works: http://demo.icu-project.org/icu-bin/convexp?conv=windows-936 If you click on the lead byte 0x81 in the codepage you will skip to the page that shows all the characters that start with this lead byte. So 0x8140 is the character 丂 and 0x8150 the character 丳. (I apologize if these are obscene or inappropriate characters, I really have no idea).

One of the problems from the programmer's point of view is that you can no longer know the number of characters in a string based on the number of bytes in the string. Some characters are represented by multiple bytes. The owner of Computer Associates was Chinese and also wanted to prepare Visual Objects for the Chinese market (the project was called Wen Deng). This is why the Visual Objects runtime was extended with functions like MBLen() and MBAt(). These functions return character positions or character lengths and no longer byte positions and byte lengths.
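The difference between byte length and character length can be shown with a two character string. This is a Python sketch for illustration only; it uses Python's "cp936" codec as a stand-in for Windows codepage 936.

```python
text = "A丂"                   # one single byte character, one double byte character
raw = text.encode("cp936")     # b'A' followed by lead byte 0x81 and trail byte 0x40

char_length = len(text)        # what a function like MBLen() reports
byte_length = len(raw)         # what a byte oriented length function reports

print(raw.hex(" "), "->", byte_length, "bytes,", char_length, "characters")
```

The trail byte 0x40 is also the code for '@' when it stands on its own, which is why byte oriented functions that search or cut strings can go wrong in a DBCS codepage.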

With the move to X# things have become easier and more complicated at the same time.

Introduction to X#

To start: X# is a .Net development language, as you all know, and .Net is a Unicode environment. Each character in a Unicode environment is represented by one (or sometimes more) 16 bit numbers. The Unicode character set makes it possible to encode all known characters, so your program can display any combination of West European, East European, Asian, Arabic etc. characters. At least when you choose the right font. Some fonts only support a subset of the Unicode characters, for example only Latin characters, and other fonts only Chinese, Korean or Japanese. The Unicode character set also has characters that represent the line draw characters that we know from the DOS world, and various symbols and emoticons also have a place in the Unicode character set.

You would probably expect that 64K characters should be enough to represent all known languages and that is indeed true. However, because emoticons and other (scientific) symbols have also found their way into Unicode, even 16 bits is not enough. To make things even more complicated we now have many different skin color variations of emoticons. Being politically correct sometimes creates technical challenges!

The good thing is that the .Net runtime knows how to handle Unicode, and the X# runtime is created on top of the .Net runtime, so it is fully Unicode for free!
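To make the "one or sometimes more 16 bit numbers" concrete, the sketch below counts the 16 bit units that a string needs in UTF-16, the encoding that .Net strings use. It is Python for illustration only and the helper name is hypothetical.

```python
def utf16_units(text):
    """Number of 16 bit code units needed to store text as UTF-16."""
    return len(text.encode("utf-16-le")) // 2

accented = utf16_units("é")                    # fits in one unit
smiley = utf16_units("\U0001F600")             # needs a surrogate pair
thumbs = utf16_units("\U0001F44D\U0001F3FD")   # thumbs up + skin tone modifier

print(accented, smiley, thumbs)  # 1 2 4
```

So a single emoticon on screen can occupy four 16 bit units in a .Net string, and its length is reported accordingly.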

The challenge comes when we want to read or write data created in applications that are or were not Unicode, such as Ansi or OEM DBF files, Ansi or OEM text files. Another challenge is code that talks to the Ansi Windows API, such as the VO GUI Classes, and the VO SQL Classes.

DBFs and Unicode

When you open a DBF inside X#, the RDD system will inspect the header of the file to detect with which codepage the file was created. This codepage is stored in a single byte in the header (using yet another list of numbers). We detect this codepage, map it to the right Windows codepage and use an instance of the Encoding class to convert the bytes in the DBF to the appropriate Unicode characters. When we write to a DBF then the RDD system will convert the Unicode characters to the appropriate bytes and write these bytes to the DBF. This works transparently and may only cause problems when you try to write characters that do not exist in the codepage for which the DBF was created. In these situations the conversion either writes an accented character as the same character without accent or, when no proper translation can be done, writes a question mark byte to the DBF. The nice thing about the way things are handled now is that you can read and write DBF files created for different Ansi codepages at the same time. However, that may cause a problem with regard to indexes.

For the sorting and indexing of DBF files the runtime will use the same mechanism as VO. You can use the SetCollation() function and specify SetCollation(#Windows) to tell the RDD system to compare using the Windows comparison routines, or SetCollation(#Clipper) to compare using the weight tables built into the runtime. Important to realize here is that these comparison routines will first convert the Unicode strings to Ansi (for SetCollation(#Windows)) or OEM (for SetCollation(#Clipper)) and will then apply the same sorting rules that VO uses. So the Windows collation will also use the app locale (that you can set with SetAppLocaleId()) so you can distinguish between, for example, German sorting and German Phonebook sorting. The Clipper collation will compare the strings using the weight table selected with SetNatDLL().
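The round trip and its failure mode can be sketched in a few lines. This is Python for illustration only, with a hypothetical helper name. Python's "replace" error handler only reproduces the question mark case; it does not perform the accent stripping that the .Net Encoding class may do.

```python
def to_dbf_bytes(text, codepage):
    """Convert a Unicode string to the bytes stored in a DBF for the given codepage."""
    return text.encode(codepage, errors="replace")

greek = "Γάλα"

in_greek_file = to_dbf_bytes(greek, "cp1253")    # every character has a byte value
in_western_file = to_dbf_bytes(greek, "cp1252")  # none of these characters exist

print(in_greek_file.decode("cp1253"))   # Γάλα
print(in_western_file)                   # b'????'
```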

VO SDK and VO SQL and Unicode (and x86 & Anycpu)

The VO SDK and SQL Classes are written against the ANSI Windows API calls in Kernel32, User32, ODBC32 etc. This means 2 things:

- These class libraries are x86 only. They are full of code where pointers and 32 bit integers are mixed and can therefore not run in x64 mode

- These class libraries use String2Psz(), Cast2Psz() and Psz2String(). In VO these functions do very little: they simply allocate static memory and copy the bytes from one location to the other.

Inside X# these functions do the same, but they also have to convert the Unicode strings to Ansi (String2Psz and Cast2Psz) and back (Psz2String). For these conversions the runtime uses the Windows codepage that was read from the current workstation.

Important to realize is that the Ansi codepage from a DBF file can and sometimes will be different from the codepage from Windows. So it is possible to read a Greek DBF file and translate the characters to the correct Unicode strings. But if you want to display the contents of this file with the traditional Standard MDI application on a Western European Windows, then you will still see 'garbage' because many of these Greek characters are not available in the Western European codepage.

We recognize that the Ansi UI layer and Ansi SQL layer could be a problem for many people and we have already worked on GUI classes and SQL classes that are fully Unicode aware. As soon as the X# Runtime is stable enough we will add these class libraries as a bonus option to the FOX subscribers version of X#. These class libraries have the same class names, property names and method names as the VO GUI and SQL Classes. Existing code (even painted windows) should work with little or no changes. We have also emulated the VO specific event model where events are handled at the form level with events such as EditChange, ListBoxSelect etc.

The GUI classes in this special library are created on top of Windows Forms and the SQL classes are created on top of Ado.NET. So these classes are not only fully Unicode but they are also AnyCPU.

Both of these class libraries will be “open”: the GUI library will use a factory model where there is a factory that creates the underlying controls, panels and forms used by the GUI library. The base factory creates standard System.Windows.Forms objects. However, you can also inherit from this factory and use 3rd party controls, such as the controls from Infragistics or DevExpress. All you have to do is to tell the GUI library that you want to use another factory class and of course implement the changes in the factory. And you can mix things if you want. For example, replace the pushbuttons and textboxes and leave the rest standard System.Windows.Forms.

You may wonder why we have chosen to build this on Windows.Forms and not WPF even when Microsoft has told us that Windows.Forms is “dead”. The biggest reason is that it is much easier to create a compatible UI layer with Windows Forms. And fortunately Microsoft recently has announced that they will come with Windows.Forms for .Net Core 3. The first demos show that this works very well and is much faster. So the future for Windows.Forms looks promising again !

In a similar way we have created a Factory for the SQL Classes. We will deliver a version with 3 different SQL factories, based on the three standard Ado.Net dataproviders: ODBC, OLEDB and SqlClient.

You should be able to subclass the factory and use any other Ado.Net dataprovider, such as a provider for Oracle or MySql. Maybe we will find a sponsor and will include these factories as well. The SQL factories will also allow you to inspect and modify readers, commands, connections etc. before and after they are opened.

Sorting and comparing strings in your code, the compiler option /vo13 and SetExact()

Creating indexes and sorting files is not the only place in your code where string sorting algorithms are used. They are also used in your code when you compare two strings to determine which is greater (string1 <= string2), when you compare 2 strings for equality (string1 = string2, string1 == string2) and in code such as ASort(). One factor that makes this extra complicated is that quite often the compiler cannot recognize that you are comparing strings because the strings are “hidden” inside a usual. The compiler has no idea for code like usual1 <= usual2 what the values can be at runtime. You could be comparing 2 dates, 2 integers, 2 strings, 2 symbols, 2 floats or even a mixture (like an integer with a float). In these cases the compiler will simply produce code that compares 2 usuals (by calling an operator method on the usual type) and “hopes” that you are not comparing apples and pears. When you do, you will receive a runtime error.

But let's start simple and assume you are comparing 2 strings. To achieve the same results in X# as in your Ansi VO App we have added a compiler option /vo13 which tells the compiler to use “compatible string comparisons”. When you use this compiler option then the compiler will call a function in the X# runtime (called __StringCompare) to do the actual string comparison. This function will use the same comparison algorithm that is used for DBF files. So it follows the SetCollation() setting and will convert the strings to Ansi or OEM and use the same routines that VO does. This should produce exactly the same results as your VO app. Of course it will not know how to compare characters that are not available in the Ansi codepage. So if you have 2 strings with, for example, different Chinese characters and use compatible comparisons to compare them, then it is very well possible that they are seen as “the same” because the differences are lost when converting the Unicode characters to Ansi. They might both be translated to a string of one or more question marks.
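The loss of information in that last case is easy to demonstrate. The sketch below is Python for illustration only; the function name is hypothetical and it ignores collation tables, showing only the effect of the conversion step.

```python
def equal_after_ansi_conversion(left, right, codepage="cp1252"):
    """Compare two strings the way an Ansi based routine sees them:
    after conversion to 8 bit values, with '?' for unknown characters."""
    return left.encode(codepage, "replace") == right.encode(codepage, "replace")

first, second = "丂", "丳"

print(first == second)                             # False: different Unicode characters
print(equal_after_ansi_conversion(first, second))  # True: both became b'?'
```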

If you compile your code without /vo13 then the compiler will not use the X# runtime function to compare strings but will insert a call to System.String.Compare() to compare the strings. Important to realize is that this function does NOT use the SetExact() logic. So where with SetExact(FALSE) the comparison “Aaaa” = “A” would return TRUE with compatible string comparisons, it will return FALSE when the non-compatible comparisons are used. On top of that, when you use the < or > operator to compare strings then you have to know how .Net sorts characters, and that sorting algorithm differs per “culture”. If you look at the .Net documentation for String.Compare() you will see that there are various overloads, and that for some of these you can specify the kind of StringComparison with an enum with values like CurrentCulture, Ordinal and also combinations that ignore the case of the string. Simply said: there is no easy way to compare strings. That is one of the reasons that a language like C# does not allow you to compare 2 strings with a < or > operator. There is too much that can go wrong.

If you compare a String with a USUAL or 2 USUALS then the compiler cannot know how to compare the strings. In that case the code produced by the compiler will convert the string to a usual and call the comparison routine for USUALs. Unfortunately, when we wrote the runtime we did not know what your compiler option of choice would be, so the runtime does not know what to do. To solve this problem we use the following solution: in the startup code of your application the compiler adds a (compiler generated) line of code that saves the setting of the /vo13 option in the so-called “runtimestate”. The code inside the runtime that compares 2 usuals will check this setting and will either call the “plain” String.Compare() or the compatible __StringCompare().

Important to realize is that when your app consists of more than one assembly it becomes important to use the setting of /vo13 consistently over all of your assemblies and main app. Mixing this option can easily lead to problems that are difficult to find. It can become even more complicated if you are using 3rd party components, especially if you don't have the source. That is why we recommend that 3rd party developers always compile with /vo13 ON. You may think that that would produce problems if the rest of the components are compiled with /vo13 off. But rest assured, this is a scenario that we have foreseen: inside the __StringCompare() code that is called when /vo13 is enabled there is a check for the same vo13 option in the runtimestate. So if the function detects that the main app is running with /vo13 off, then the function will call the same String.Compare() code that the compiler would have used otherwise.
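The SetExact() behavior for the = operator can be sketched as a "compare only up to the length of the right hand side" rule. This is Python for illustration only: the function name is hypothetical, and the sketch uses plain character comparison rather than any of the collations discussed in this article.

```python
def compat_equals(left, right, exact=False):
    """Sketch of the '=' operator with SetExact(TRUE) or SetExact(FALSE)."""
    if exact:
        return left == right
    # Non-exact: only the characters covered by the right hand side count.
    return left.startswith(right)

print(compat_equals("Aaaa", "A"))              # True  (SetExact(FALSE))
print(compat_equals("Aaaa", "A", exact=True))  # False (SetExact(TRUE))
print(compat_equals("A", "Aaaa"))              # False: the right side is longer
```

Note that the rule is not symmetric: swapping the operands can change the result.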

SetCollation

We have already mentioned SetCollation() before. In the X# runtime we have added 2 new possible values for SetCollation(): #Unicode and #Ordinal. These 2 new collations can be used in code that wants to use the “old” Clipper behavior with regard to Exact and Non-Exact string comparisons (in other words, wants to only compare up to the length of the string on the right hand side of the comparison) but still wants to use Unicode or Ordinal string comparisons. To use this, compile your code with /vo13 ON and set the collation at startup. If you choose SetCollation(#Unicode) then String.Compare() will be used to compare the strings. If you use SetCollation(#Ordinal) then String.CompareOrdinal() will be used. Both of these will only be used when the string on the right side has the same length as or is longer than the left side. If the string on the right side is the shortest then an ordinal compare will be done on the number of characters in that string. The Ordinal comparison is the fastest of these options, and is NOT culture dependent. The #Unicode comparison is culture dependent and faster than the #Windows and #Clipper collations, mostly because these last 2 have to convert from Unicode to 8 bit values.

Handling Binary data and Unicode

Many developers have stored binary data (such as passwords) in DBF files or have read or written binary files in their VO applications using functions such as MemoRead() and MemoWrit(). That will NOT work reliably in X# (or any other Unicode application).

Let's start with binary data (like passwords) in DBF files. Encryption algorithms in VO would usually produce a list of fairly random 8 bit values, with all possible combinations of bits. The Crypt() function in the runtime does that. To produce the same result as the VO encoding it converts the Unicode string to 8 bits, runs the encoding algorithm and converts the result back to Unicode. When you then write that string to the DBF it will have to become 8 bits again. Especially if the DBF is OEM this will produce a problem. To work around this we will add special versions of the Crypt function to the runtime that accept or return an array of bytes, and we will also add functions to the runtime that allow you to write these bytes directly to the DBF without any conversions. This should help you to avoid conversions that may corrupt your encrypted passwords. Of course you can also use other techniques, such as Base64 encoding the passwords, so the result will always be a string where the values are never interpreted as special characters.
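The Base64 technique mentioned above can be sketched as follows. This is Python for illustration only, and the byte sequence is just a stand-in for the output of an encryption routine.

```python
import base64

# Stand-in for encrypted output: every possible 8 bit value once.
cipher = bytes(range(256))

# Base64 produces plain ASCII text, which is safe to store in a character
# field of any Ansi or OEM DBF because no conversion will change it.
stored = base64.b64encode(cipher).decode("ascii")

# Reading it back gives the original bytes again.
restored = base64.b64decode(stored)

print(len(cipher), "bytes became", len(stored), "characters")
```

The price is size: the stored text is about one third longer than the binary data, so the field has to be wide enough.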

Something similar may happen if you use memoread/memowrit.

MemoRead() in the X# runtime will use a Framework function that is “smart” enough to detect if the source file is an Ansi text file or a Unicode text file (by looking at the possible Byte Order Mark at the beginning of the text file). It can also detect if a text file uses UTF encoding. The result is that MemoRead() can read all kinds of text files and return their contents in a .Net Unicode string.

If you use a function like MemoRead() to read the contents of, for example, a Word or Excel file, the runtime will have no idea how to translate this from bytes into Unicode. It will produce a Unicode string, but it is most likely not a correct representation of the bytes in the file. When you use MemoWrit() to write the string back to a file then we have to do a reverse conversion. Our experience is that this quite often corrupts the contents. MemoRead() uses System.IO.File.ReadAllText() and MemoWrit() uses System.IO.File.WriteAllText() btw.

If you really want to deal with binary data then we recommend that you have a look at the .Net methods System.IO.File.ReadAllBytes() and System.IO.File.WriteAllBytes(). These functions return or accept (as the name implies) an array of bytes. No conversion will be done.
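Why the text round trip corrupts binary data can be shown without touching a file. The sketch below is Python for illustration only and works on an in-memory byte sequence; it mimics the bytes to text to bytes conversion in spirit and is not what the .Net functions do internally.

```python
# A few bytes as they could appear in a binary file. The last three are
# not valid UTF-8, so they cannot be turned into text without loss.
original = bytes([0x50, 0x4B, 0x03, 0x04, 0xFF, 0xFE, 0x80])

# Treating the bytes as text: invalid sequences become a replacement character.
as_text = original.decode("utf-8", errors="replace")

# Writing the text back does not restore the original bytes.
round_tripped = as_text.encode("utf-8")

print(original.hex(" "))
print(round_tripped.hex(" "))
print("intact" if round_tripped == original else "corrupted")
```

Keeping the data as an array of bytes from start to finish avoids the problem entirely.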

Summary

Adding Unicode support to your application makes your application much more powerful. You are able to mix characters from different cultures. If you want to keep on using “old” Ansi and/or OEM files, you will have to make sure that you configure your application correctly.

Unfortunately there is no universal best solution. You will have to evaluate your application and decide yourself what is good for you. Of course we are available on our forums to help you make that decision.

Original article URLs: www.xsharp.info/articles/blog/x-strings-and-codepages-part-1 www.xsharp.info/articles/blog/x-strings-and-codepages-part-3